Why Kapwing uses multiple AI models

Each creative task is suited to a different AI model

No single AI model excels at every creative task. Some models are designed for realistic motion and cinematic continuity, while others prioritize speed, cost efficiency, animation, or transformation tasks like editing and translation.

Kapwing integrates multiple best-in-class AI models to ensure each stage of the creative process uses the most appropriate underlying technology. Rather than forcing creators into a one-model-fits-all system, Kapwing applies different models based on the task.

This model-agnostic approach allows creators to benefit from rapid advances in generative AI without needing to understand or manage the complexity behind each model. As new models emerge and existing ones improve, Kapwing can adopt them where they add real creative value.

AI video generation models available in Kapwing

Realistic motion, multi-shot scenes, and visually consistent characters

Sora 2

Designed for end-to-end video generation with strong scene coherence and natural motion. Sora is well suited for creating longer sequences and fully realized video concepts, particularly when prompts require interpretation or expansion across multiple shots.

Veo 3

Built for high-fidelity video generation with precise visual control. Veo is well suited for producing polished individual clips, b-roll, and short-form visuals where image quality, composition, and aspect ratio flexibility matter.

Kling 2.6

Built for precise motion control and smooth, consistent movement. Kling 2.6 excels at generating realistic dance movements, camera motion, and repeatable subject behavior — a strong choice when timing and choreography matter.

Seedance 1.8

Optimized for efficient video generation with an emphasis on motion, camera behavior, and stylized outputs. Seedance is commonly used for simpler scenes, controlled camera angles, and high-volume creation where speed and cost efficiency are priorities.

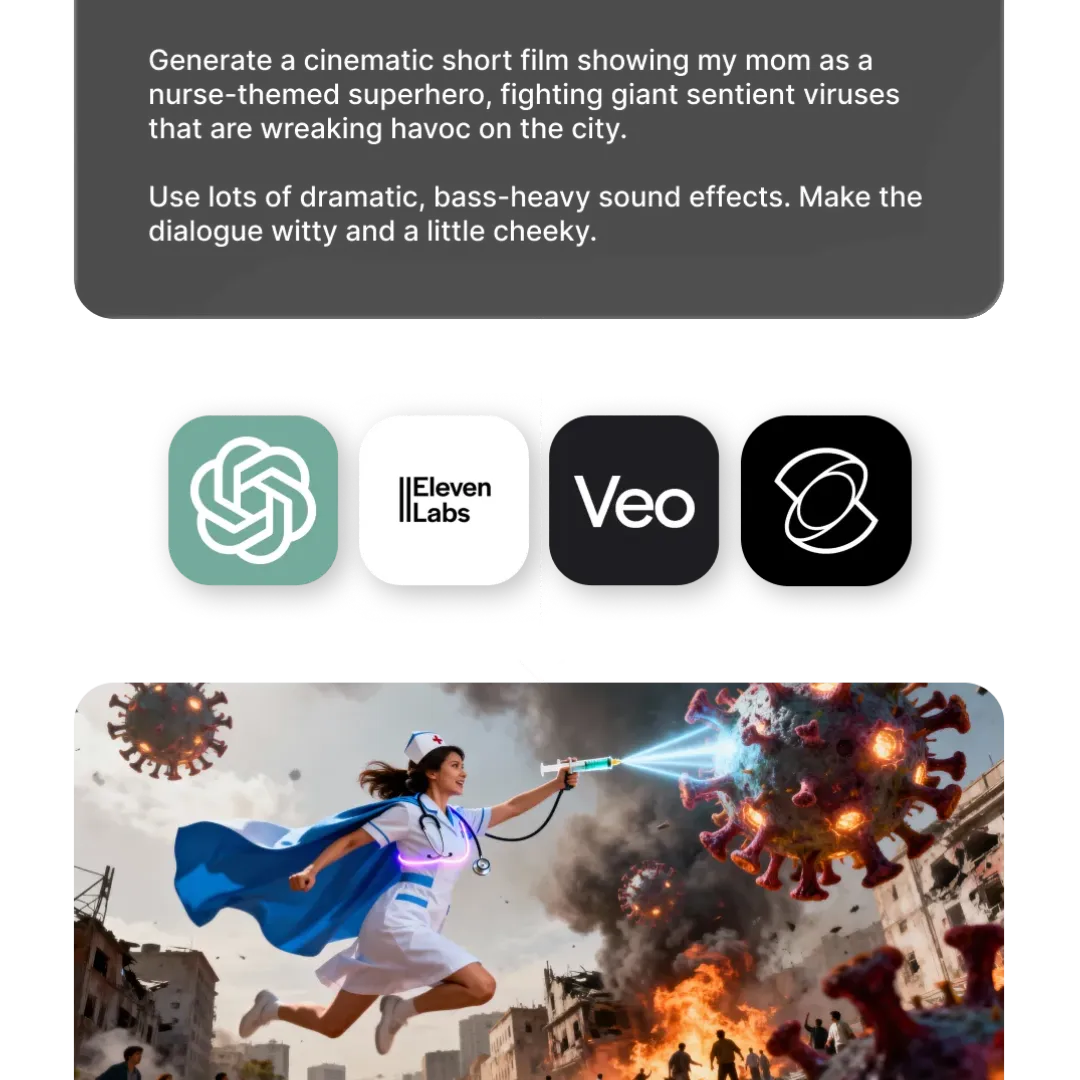

Made in Kapwing — by leading AI models

Image and audio models that support video creation

Kapwing integrates specialized models that support visuals, sound, and post-production tasks

ChatGPT Image 1

Used for generating images from scratch with strong prompt interpretation and flexible visual styles. Commonly applied to non-photorealistic imagery such as icons, illustrations, and conceptual visuals.

Google Nano Banana

Designed for image generation and editing with object-level control. Nano Banana is well suited for adding, adjusting, or layering individual elements within an image while preserving overall visual consistency.

MiniMax 2.0

Generates custom music, audio tracks, and sound effects to enhance video content. Purpose-built for social media, music videos, and creative audio experiments.

Seedream 4.0

Optimized for large-scale image transformation and re-imagination. Seedream is well suited for generating entirely new visual interpretations based on existing concepts, styles, or source imagery.

How different AI models power creative workflows

Applied across ideation, generation, and refinement

Kapwing applies different categories of AI models at different stages of the creative process. Each model type is selected based on the kind of problem being solved — whether that’s generating new content, transforming existing media, or understanding language and sound.

Rather than relying on a single system, Kapwing combines generative, transformation, and understanding models to support end-to-end video creation while keeping the workflow simple for creators.

- Generative models: Used to create new visual, audio, or video content from text or prompts, including video scenes, images, music, and animations.

- Transformation models: Used to modify, refine, or repurpose existing content — such as editing video with text commands, extracting clips, enhancing audio, or translating speech.

- Understanding models: Used to analyze and interpret media and language, enabling tasks like subtitle generation, dubbing, lip sync, and content structuring.

Frequently Asked Questions

We have answers to the most common questions that our users ask.

What is an AI model?

An AI model is a trained system that learns patterns from large datasets to generate, edit, or analyze content such as text, images, audio, or video. In tools like Kapwing, AI models power generative features, turning prompts into videos, creating images, producing voice overs, and enhancing media automatically.

Which AI models does Kapwing support?

Kapwing currently integrates eight AI models across video, image, audio, and language workflows. These include models used for AI video generation, image creation and editing, text-to-speech voice overs, music and sound effects, and structured text generation. Individual models include: Seedream, MiniMax, Google Nano Banana, ChatGPT Image, Sora, Veo, Kling, and Seedance.

Is Sora available to use on Kapwing?

Yes, Kapwing integrates Sora as part of its AI video workflows.

Is Veo available to use on Kapwing?

Yes, Kapwing integrates Veo as one of the AI video models available to create content.

Is Nano Banana available to use on Kapwing?

Yes, Kapwing integrates Google Nano Banana as one of the advanced AI image models used across its creative workflows.

Are the AI models free?

Kapwing’s AI models aren’t offered as standalone tools. Instead, they’re included as part of your Kapwing subscription plan. Anyone can get started for free. This way, you don’t need separate subscriptions for each AI model — everything works together inside Kapwing.

What did Kapwing’s AI Diversity Report find?

Kapwing’s AI Diversity Report found that many AI-generated videos under-represent women and people of color and can reinforce biased portrayals of roles and professions. The findings highlight industry-wide challenges in generative AI and the importance of transparency and ongoing efforts to improve fairness.

Can I choose which AI model Kapwing uses?

Yes. When using AI generation tools for images, video, or audio, you can choose which AI model to use. In other cases, Kapwing automatically selects the most appropriate AI model based on your task. This helps simplify the creative process while delivering optimal results.

Will Kapwing add new AI models in the future?

Yes. Kapwing actively evaluates and integrates new AI models as the technology evolves. This ensures creators always have access to the latest advancements across video, image, audio, and language generation.

What’s the difference between AI models and AI tools?

AI models are the underlying systems trained to generate, analyze, or transform content, such as video, images, audio, or text. They provide the core capabilities — for example, AI video generation, AI image creation, or speech synthesis. AI tools are the user-facing features built on top of those models. In Kapwing, tools combine AI models with an editor, controls, and workflows so creators can apply model capabilities easily without interacting with the models directly.

Does Kapwing train its own AI models?

Kapwing primarily integrates third-party AI models developed by leading AI research organizations and technology companies. These models are incorporated into our platform to power creative workflows across video, image, audio, and language tasks.

Is Kling available to use on Kapwing?

Yes, Kapwing integrates Kling 2.6 Motion Control as one of the advanced AI video models used across its creative workflows.

Get started with your first video in just a few clicks. Join over 35 million creators who trust Kapwing to create more content in less time.