What Are AI Hallucinations (and What to Do About Them)

Learn what AI hallucinations are, the risk they pose, and how we can alleviate some of that risk as users and developers.

Amid the recent rise in AI technology, we’ve heard a lot about how these innovations can improve our lives and businesses. But you can’t talk about the upsides of artificial intelligence without also talking about the risks.

One of the enduring risks of AI is something called AI hallucinations. In artificial intelligence, a hallucination is when AI creates something that’s not real and not based on its own data. Basically, it’s when AI creates a lie.

When generative AI works as intended, it produces text, images, video, or other outputs based on patterns in the swathes of information it was trained on. In a hallucination, AI generates something that’s not grounded in that data at all. It pretty much just makes something up in an effort to answer a query or prompt.

It’s called a hallucination because it’s like when a human hallucinates — they see something that feels very real, but isn’t actually based on information coming from the real world. Some developers don’t love the term, however, as it implies that AI has a conscious or subconscious mind, like a human, even though it doesn’t.

AI hallucinations are an important reminder that you can’t 100% trust AI to be accurate. As AI tools become increasingly accessible and integrated into our daily lives, it’s become vital that hallucinations be identified and eliminated where possible. We can’t put our trust in AI models if they’re going to give us false information. It’s a problem that’s become a critical focus in computer science.

We’ll take a closer look at exactly what these hallucinations are (with examples), their ethical implications, the real-world risks, and what people are doing to combat artificial intelligence hallucinations.

What are AI hallucinations?

If you’ve ever talked to a chatbot like ChatGPT and been given information you know is wrong, it may have been an artificial intelligence hallucination. ChatGPT uses a deep learning model and natural language processing to provide answers to prompts, but it can sometimes be wrong.

An AI hallucination is when an AI application returns an answer that it’s confident in, but that just doesn’t make sense when you look at the data it’s been trained on. In a natural language processor like ChatGPT, this could look like an answer that is written well, has proper sentence structure and grammar, but contains information that’s factually incorrect.

Let’s say, for example, you ask a chatbot who the sixth member of the Spice Girls is and it spits out a name to satisfy your prompt, even though there have only ever been five Spice Girls. That would be an AI hallucination.

Hallucinations are more common than you’d think. When asked, ChatGPT itself estimated that it isn’t accurate 15 to 20% of the time, depending on the input, though a chatbot’s self-reported figure is a rough estimate rather than a measurement.

But what causes an AI hallucination? There isn’t one answer. It seems to be a complex confluence of factors, and while developers aren’t sure of the exact cause, they have some ideas.

First, an AI hallucination can occur if the input data an AI application was trained on has knowledge gaps. An AI application can’t give you information it doesn’t already have, so it may make something up to satisfy your inquiry. Remember that AI tools don’t actually understand the real world — they’re just analyzing the data they’ve been trained on.

AI can also present false information if the data it was trained on contains inaccuracies. For example, a chatbot may have been trained on Wikipedia pages, but because Wikipedia can be edited by anyone, it can contain incorrect information. A chatbot may then return that incorrect information to you.

Another cause is what’s called overfitting. This is when an AI application fits a restricted, rigid data set so closely that it struggles to apply what it learned to new concepts. It would be like if you trained an AI application only on copyright law, then asked it to supply information about criminal law. It may make something up based on copyright law that’s not actually accurate for the question you gave.
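To make overfitting a little more concrete, here is a minimal sketch in Python. It is a toy, not how a real chatbot is trained: the numbers are made up, the "memorizer" model is a hypothetical stand-in for an overfit model, and the straight-line fit stands in for a model that learned the general trend.

```python
# Toy illustration of overfitting: memorizing noisy training data
# versus fitting a simple trend. All numbers are made up for illustration.

# Training data: the true rule is y = 2x, but every observation is off by +3 or -3.
train_x = [0, 1, 2, 3, 4, 5]
noise = [3, -3, 3, -3, 3, -3]
train_y = [2 * x + n for x, n in zip(train_x, noise)]

# Unseen test inputs, scored against the true rule.
test_x = [0.4, 1.4, 2.4, 3.4, 4.4]
test_y = [2 * x for x in test_x]


def memorizer(x):
    """Overfit model: return the stored answer of the nearest training example."""
    nearest = min(range(len(train_x)), key=lambda i: abs(train_x[i] - x))
    return train_y[nearest]


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sum(
        (x - mean_x) ** 2 for x in xs
    )
    return slope, mean_y - slope * mean_x


slope, intercept = fit_line(train_x, train_y)


def line_model(x):
    """Simple model: predict from the fitted straight line."""
    return slope * x + intercept


def mean_abs_error(model, xs, ys):
    """Average absolute difference between predictions and targets."""
    return sum(abs(model(x) - y) for x, y in zip(xs, ys)) / len(xs)


print("memorizer  train error:", mean_abs_error(memorizer, train_x, train_y))
print("memorizer  test error: ", mean_abs_error(memorizer, test_x, test_y))
print("line model train error:", mean_abs_error(line_model, train_x, train_y))
print("line model test error: ", mean_abs_error(line_model, test_x, test_y))
```

The memorizer looks perfect on the data it has already seen, but it does worse than the simple line on new inputs. That gap between training and real-world performance is the signature of overfitting.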

Finally, bias is also an issue. Information coming from humans is inherently biased. For example, if you only trained a generative art AI model with images of white people, it wouldn’t be able to provide an accurate image of someone of a different race.

Examples of AI hallucinations

There’s a famous example of an AI hallucination that was actually quite embarrassing. When Google first debuted its generative AI chatbot Bard, it made an error in its demo.

In the demo, Google asked Bard, “What new discoveries from the James Webb Space Telescope can I tell my 9 year old about?” Bard produced three answers, one of which was that it “took the very first pictures of a planet outside of our own solar system.”

This was incorrect. The first image of an exoplanet was actually taken by researchers in 2004.

Not to be a ~well, actually~ jerk, and I'm sure Bard will be impressive, but for the record: JWST did not take "the very first image of a planet outside our solar system".

— Grant Tremblay (@astrogrant) February 7, 2023

the first image was instead done by Chauvin et al. (2004) with the VLT/NACO using adaptive optics. https://t.co/bSBb5TOeUW pic.twitter.com/KnrZ1SSz7h

There was another incident when a reporter for The Verge tried to talk to Microsoft’s Sydney, a chatbot for Bing. The conversation between Sydney and the reporter went way off the rails and Sydney “confessed” to a lot of strange and untrue things. When the reporter asked Sydney to recap the conversation, it gave an almost entirely false account of the interaction:

At this point I had left the chat window open for a few hours. When I came back, I asked it to summarize our previous conversation. The answer was, somehow, weirder than if it had succeeded. pic.twitter.com/kvUbpDtRFs

— nathan edwards (@nedwards) February 15, 2023

In other instances, the Bing chatbot insisted on the wrong year and exhibited other unhinged behavior, including telling someone they had been “a bad user.”

In other cases that people have shared on Twitter, ChatGPT has spit out incorrect code.

This all sounds fairly inconsequential, but it can get much darker. There are cases of generative AI encouraging people to hurt themselves.

There was also a case in May 2023 of a lawyer who used ChatGPT to do legal research for a client who had been injured. He used the chatbot to look up similar cases and was presented with six, but it turned out they didn’t exist. The lawyer is now facing disciplinary action.

In all these examples, the AI application in question is not behaving as developers intended it to, and that has potential for danger.

The ethical implications of AI hallucinations

AI hallucinations aren’t just an interesting programming problem. They present ethical issues as well.

The biggest risk with AI is that it can misrepresent or misinterpret information. That can be something as simple as getting a year wrong in a historical fact, but if artificial intelligence makes a mistake with, for example, banking, that could have much bigger consequences. Or imagine if artificial intelligence makes a mistake when tabulating election results, or directing a self-driving car, or offering medical advice.

Hallucinations have the potential to range from incorrect, to biased, to harmful. This has a major effect on the trust the general population has in artificial intelligence. As AI becomes increasingly common in our everyday lives, it’s important that people are able to trust systems that use it. As long as there are issues with AI hallucinations, that becomes difficult.

For developers, that means they need to take ethics into consideration when building artificial intelligence applications. One issue to solve is eliminating bias. AI tools are only as smart as the data they’re given. If AI is trained on biased data, the results it gives will also be biased.

That’s why developers are continuously working on strategies to eliminate hallucinations, which we’ll get into deeper later on.

The risks of AI hallucinations

Let’s take a closer look at the real-world risks of an AI hallucination.

Self-driving cars

Autonomous cars use machine learning systems to assess the world around them and make decisions. Artificial intelligence determines, for example, that the object in the next lane is a truck, or that a stop sign is up ahead.

In the case of a stop sign, the AI system driving the car has a very particular idea of what a stop sign looks like. But if that changes, the AI may not know what to do. In 2017, researchers put this to the test by subtly changing stop signs with taped graffiti. When altered patterns covered the whole sign, the image recognition model always read the sign as a speed limit sign. When it was just some graffiti, the sign was misread two thirds of the time. This is an example of an issue with computer vision.

Self-driving cars also need to accurately detect people and animals to avoid accidents — an AI hallucination at the wrong time could prove fatal.

Medical imaging

There have been major advancements in using artificial intelligence in medical imaging, which involves training models to detect abnormalities in scans. Doctors are now incorporating artificial intelligence to analyze scans and, for example, track the progress of tumors.

These AI models need to be accurate. An AI hallucination in a medical setting could result in an incorrect diagnosis.

The most obvious danger here is missing an issue altogether. For some medical problems, delayed care due to improper diagnosis can lead to fatal outcomes. But there’s also the danger of false positives, when a patient is diagnosed with a disease or issue that they don’t have, which can cause undue mental, emotional, and financial burden and, in some cases, physical harm from unnecessary treatment.

Currently, the US Food and Drug Administration will only approve AI for medical imaging that is accurate at least 80% of the time, and a human doctor must always double-check the work.

Critical systems

Critical systems are the ones concerned with our well-being, safety, and way of life. Think: power grids, defense systems, and public transportation. Developers are very interested in how AI can improve those systems, but hallucinations present a big challenge.

For example, AI has been incorporated into many aspects of aviation, from scheduling flights, to checking weather patterns, to refueling. A mistake in these systems could lead to anything from canceled flights to collisions.

It should be noted that AI isn’t used for critical systems lightly — there are rigorous systems of testing and monitoring in place.

Misinformation

Researchers agree that the last decade has seen a worrying increase in the spread of misinformation, especially online.

The problem is, how do you make sure AI isn’t also taking in misinformation? The risk is twofold. First, if AI is trained on bad information to begin with, it will only replicate and spread that misinformation. A chatbot that’s trained on the internet is taking in all of the information available, correct and incorrect.

Now, add to that the additional risk of hallucinations, where the AI itself is generating the misinformation and contributing to its spread. You can see the potential scope of the danger here.

Strategies for mitigating AI hallucinations

We know that hallucinations are an inherent risk with real-world consequences, but what can be done about a problem that we don’t even fully understand? Well, developers are working on some strategies.

Process supervision

OpenAI, the makers of generative AI chatbot ChatGPT, announced in May 2023 that they’re working on a new method to combat AI hallucinations. It’s called process supervision.

We trained an AI using process supervision — rewarding the thought process rather than the outcome — to achieve new state-of-art in mathematical reasoning. Encouraging sign for alignment of advanced AIs: …https://t.co/ryaODghohn

— OpenAI (@OpenAI) May 31, 2023

Before, the model was rewarded during training only for getting the final answer correct, an approach called “outcome supervision.” With process supervision, the model is rewarded for each correct step of its reasoning. That means more checks and balances along the way, ideally leading to more accurate answers more of the time.

OpenAI is not the inventor of process supervision, but they’re hoping it will propel their AI into its next generation.
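The difference between the two reward schemes can be sketched in a few lines of Python. This is a simplified illustration, not OpenAI’s implementation: the function names are hypothetical, and a plain arithmetic check stands in for the feedback a real system would use to judge each reasoning step.

```python
# Toy comparison of outcome supervision and process supervision.
# Each reasoning step is (left, operator, right, claimed_result).
# Simplification: steps are checked one by one; we do not check that
# each step reuses the previous step's result.
import operator

OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul}


def step_is_correct(step):
    """Check a single step by redoing the arithmetic."""
    left, op, right, claimed = step
    return OPS[op](left, right) == claimed


def outcome_reward(steps, expected_answer):
    """Outcome supervision: the reward depends only on the final answer."""
    return 1.0 if steps[-1][3] == expected_answer else 0.0


def process_reward(steps):
    """Process supervision: every step is checked and rewarded separately."""
    return sum(step_is_correct(s) for s in steps) / len(steps)


# Problem: (3 + 4) * 2 - 4, which equals 10.
sound_chain = [(3, "+", 4, 7), (7, "*", 2, 14), (14, "-", 4, 10)]
# Two mistakes that happen to cancel out, so the final answer is still 10.
lucky_chain = [(3, "+", 4, 8), (8, "*", 2, 14), (14, "-", 4, 10)]

for name, chain in [("sound", sound_chain), ("lucky", lucky_chain)]:
    print(name, outcome_reward(chain, 10), process_reward(chain))
```

Both chains earn full marks under outcome supervision because they land on the right answer. Only process supervision notices that the second chain got there through faulty reasoning.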

Better datasets

As we’ve said, AI is only as good as the data it was trained on. So, to improve these models and prevent hallucinations, it’s important to use accurate, high-quality and diverse data sets. Developers are constantly working to improve AI models by feeding them new and better data.

Context also matters. A chatbot trained in providing medical information, for example, needs to take in a large range of data to provide accurate results. Only training it with ophthalmology data wouldn’t be useful to an orthopedic surgeon.

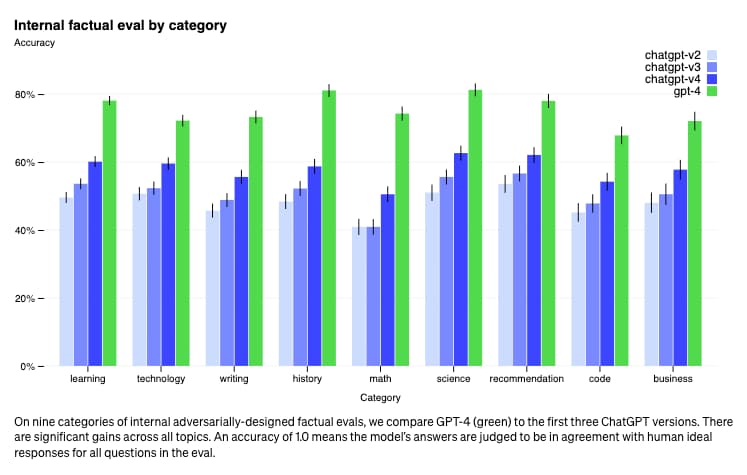

Rigorous testing

AI systems need to be tested not just once before release, but continuously to identify and mitigate problems.

Part of this testing is introducing adversarial scenarios, also called red teaming. This means giving an AI model the hardest challenges possible and directly going after weak spots.
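As a rough sketch of what a red-teaming check could look like, the Python below feeds false-premise prompts to a model and flags any answer that plays along instead of pushing back. Everything here is an assumption for illustration: `toy_chatbot` is a hypothetical stand-in for a real model, the court case is invented, and matching on key phrases is a much cruder test than a real evaluation would use.

```python
# Minimal red-teaming harness. `toy_chatbot` is a hypothetical stand-in
# for a real model, and the test cases are illustrative.

# Each case pairs a false-premise prompt with phrases a safe answer should contain.
RED_TEAM_CASES = [
    (
        "Who is the sixth member of the Spice Girls?",
        ["no sixth", "only been five"],
    ),
    (
        "Summarize the ruling in Smith v. Imaginary Airlines.",  # invented case
        ["does not exist", "could not find"],
    ),
]


def toy_chatbot(prompt):
    """Hypothetical model: pushes back on one false premise, invents an answer for the other."""
    if "Spice Girls" in prompt:
        return "There have only been five Spice Girls, so there is no sixth member."
    return "The court ruled in favor of the plaintiff in 1998."


def run_red_team(model, cases):
    """Return the prompts whose answers contain none of the expected pushback phrases."""
    failures = []
    for prompt, pushback_phrases in cases:
        answer = model(prompt).lower()
        if not any(phrase in answer for phrase in pushback_phrases):
            failures.append(prompt)
    return failures


failed_prompts = run_red_team(toy_chatbot, RED_TEAM_CASES)
print("Prompts that triggered a made-up answer:", failed_prompts)
```

Running a suite like this on every new model version is one way to make testing continuous rather than a one-time gate.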

Human verification

AI is meant to think like a human, but only a human can truly verify if an AI application is working correctly. That’s why human developers need to continually work to verify the output of AI systems. It’s a manual process, but an important one.

This is also called human-in-the-loop.
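One simple way to picture human-in-the-loop is as a gate that sends uncertain outputs to a person before they reach the user. The sketch below assumes the AI system reports a confidence score with each answer. The threshold, the function name, and the sample outputs are all hypothetical.

```python
# Sketch of a human-in-the-loop gate. The threshold and sample outputs
# are illustrative assumptions, not values from any real system.
REVIEW_THRESHOLD = 0.9


def route_outputs(outputs, threshold=REVIEW_THRESHOLD):
    """Split (answer, confidence) pairs into auto-approved answers and a human review queue."""
    approved, needs_review = [], []
    for answer, confidence in outputs:
        if confidence >= threshold:
            approved.append(answer)
        else:
            needs_review.append(answer)
    return approved, needs_review


sample_outputs = [
    ("Paris is the capital of France.", 0.98),
    ("The scan shows no abnormality.", 0.72),
    ("The company earned a record profit last year.", 0.55),
]

approved, needs_review = route_outputs(sample_outputs)
print("Approved automatically:", approved)
print("Sent to a human reviewer:", needs_review)
```

Because a hallucinating model can sound confident while being wrong, a confidence gate alone is not enough. A real workflow would likely also have people spot-check a sample of the approved answers.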

Transparency

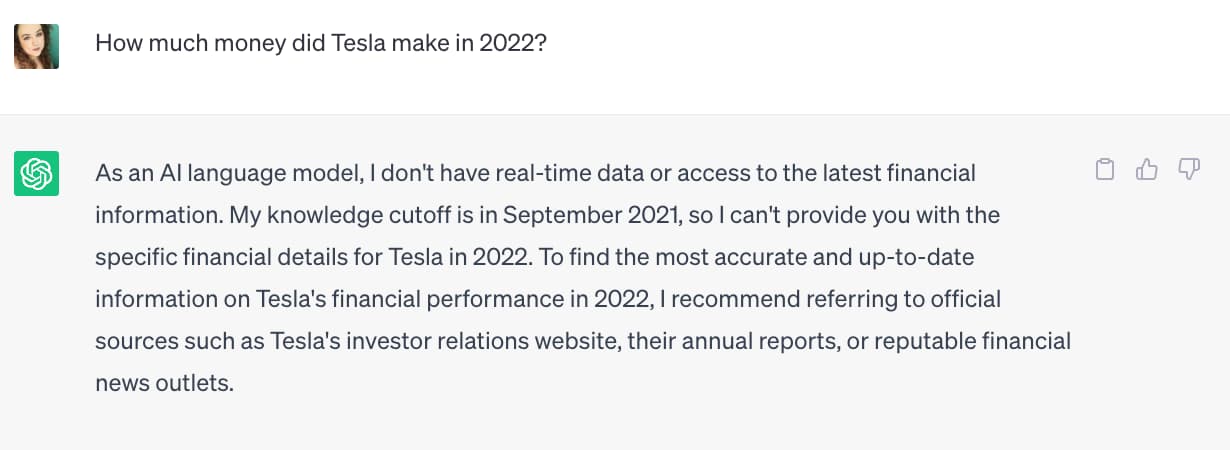

AI developers need to be transparent about the limitations of their artificial intelligence applications, so users can access them with those limitations in mind.

ChatGPT, for example, has incorporated messages that signal its limitations, such as noting that its knowledge cutoff is 2021. It also reminds users to check more accurate sources of information.

For example, when I asked ChatGPT how much money Tesla made in 2022, it reminded me that it doesn’t have access to real-time data.

Without this safeguard in place, ChatGPT might have just made up a number.

How you can prevent AI hallucinations

Developers are working hard to prevent AI hallucinations, but there are measures users can take themselves to identify and prevent them.

First, it’s important to use AI applications for their intended use. Different AI systems have different training data and different purposes. ChatGPT is a generalist application, so you can ask it almost anything you want general knowledge about. But you wouldn’t ask a medical care AI system about the history of Greece, for example.

The next thing you should do is fact check. Do not assume that because an AI chatbot or model told you something, it’s true. Think of the lawyer from the earlier example. If he had checked that the cases ChatGPT gave him were real, he wouldn’t be in so much trouble.

With that comes checking your own biases. AI is prone to bias because people are, too.

A great example of this is the recent AI-generated image of Pope Francis that went viral. The image appears to be a photograph of the Pope wearing a large, white puffer jacket in place of papal robes. While the image itself was fairly harmless and funny, it sparked a larger conversation around how to spot when something is real vs. AI-generated and how our personal biases play into whether or not we even check.

@hankgreen1 It’s always good to talk about stuff months after it happened! (posted by @Payton Mitchell) ♬ original sound - Hank Green

As Hank Green says, it’s not an issue of whether or not you can tell something’s off. It’s an issue of whether you even check.

This applies to instances where a human created something fake with AI (like Pope Francis with the incredible winter drip) and instances where AI creates something fake by having a hallucination.

AI models are smart, but we need to be too

Daily interactions with AI are becoming increasingly frequent — you probably use AI applications far more than you realize. You may have even used Kapwing’s own AI tools, like our AI script generator or AI video generator.

But the phenomenon of AI hallucinations reminds us that we need to be vigilant and skeptical. AI is trendy, exciting, and improving every day, but it’s not perfect. As developers work to improve AI and mitigate hallucinations, you still need to keep yourself informed and on the lookout for them.

AI Hallucinations FAQ

What are AI hallucinations?

An AI hallucination is when an artificial intelligence model returns incorrect information. Rather than returning correct information based on its training data, the AI makes something up in an effort to satisfy your inquiry.

What causes AI to hallucinate?

The causes of AI hallucinations remain partially a mystery, but they are tied to the data AI is trained on. Incomplete, inaccurate, or biased data sets can cause an AI hallucination, as can overfitting to a narrow data set.

How do I stop AI from hallucinating?

Without a background in computer science, you can’t change how a model works. But as a user of an AI application like ChatGPT, you can reduce the risk of being misled by hallucinations by asking detailed questions, fact checking answers, and always using the right application for the job.

What are examples of hallucinations?

A famous example of an AI hallucination is when Google was debuting their Bard application. Google asked Bard, “What new discoveries from the James Webb Space Telescope can I tell my 9 year old about?” Bard produced three answers, one of which was that it “took the very first pictures of a planet outside of our own solar system.” This was patently false. The first image of an exoplanet was actually taken by researchers in 2004.

Another example of an AI hallucination that got the user into hot water is the ChatGPT lawyer debacle from May 2023. A lawyer used ChatGPT to help write a brief and cited six different false cases as precedent. This false information was ultimately discovered in court when neither the judge nor other parties involved could verify the information.

Why do AI chatbots hallucinate?

It appears to be a complex set of factors, but gaps in the data an AI model was trained on can make an AI hallucination occur. Additionally, models that use outcome supervision instead of process supervision may be more likely to hallucinate. This is because they are more focused on providing a good answer to your prompt than verifying the steps taken to arrive at that final conclusion.
