Using ChatGPT to Boost SEO: A Case Study

We ran a ChatGPT SEO experiment. The results weren’t what we expected.

Like any online publisher, we’re always looking for ways to make sure the content we create actually gets seen. Search engine algorithms can be unpredictable, and digital traffic often feels like feast or famine — sometimes with no clear reason why.

From SEO to GEO (generative engine optimization), marketers rely on countless acronym-based approaches to optimize their content. Still, even the best strategies only improve through experimentation.

That’s where our idea came in: make our articles easier for large language models (LLMs) to read and reference in conversations with users.

Here’s how it worked:

The Experiment

Our hypothesis was that adding structured, LLM-friendly summaries (GPT_NOTEs) to new resource articles would increase the percentage of traffic coming from ChatGPT redirects compared to a control group.

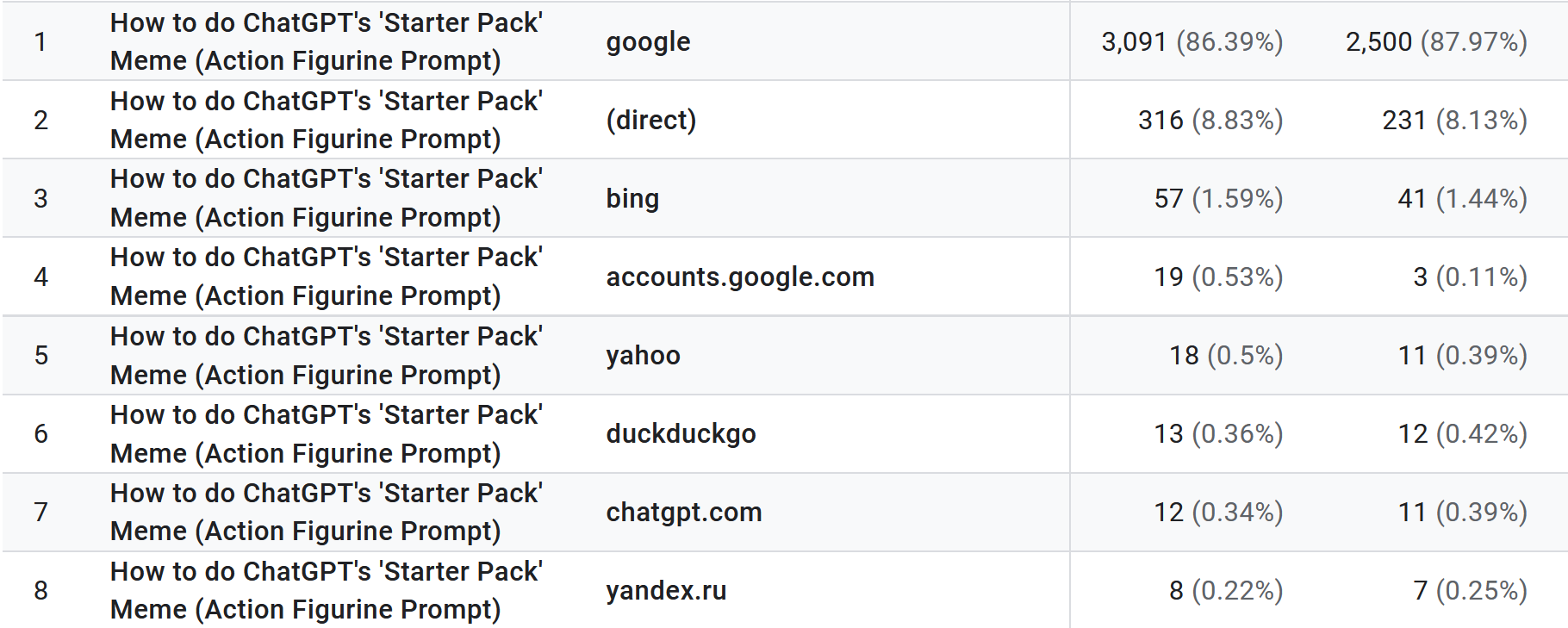

To test this, we ran a 45-day experiment using Google Analytics 4 (GA4) to track each article’s total views and the visits referred by ChatGPT. This gave us a baseline for comparison and a consistent way to measure what percentage of each article’s total views came from ChatGPT, the key metric for this test.

The following equation was used to calculate the percentage of ChatGPT-driven views for both the control and test groups:

ChatGPT share (%) = (views referred by ChatGPT ÷ total views) × 100
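As a minimal sketch of that calculation, the Python below assumes per-article view counts have already been exported from GA4. The `ArticleTraffic` record and all function names are hypothetical. The write-up doesn’t say whether a group’s average is the mean of per-article percentages or a pooled ratio of summed views, so both are shown.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class ArticleTraffic:
    title: str
    total_views: int    # all views recorded for the article during the test window
    chatgpt_views: int  # views attributed to ChatGPT referrals


def chatgpt_share(article: ArticleTraffic) -> float:
    """Percentage of one article's total views that came from ChatGPT."""
    if article.total_views == 0:
        return 0.0  # no traffic yet, so report 0% instead of dividing by zero
    return 100 * article.chatgpt_views / article.total_views


def group_average_share(articles: list[ArticleTraffic]) -> float:
    """Unweighted mean of per-article shares: every article counts equally."""
    return mean(chatgpt_share(a) for a in articles)


def pooled_share(articles: list[ArticleTraffic]) -> float:
    """ChatGPT views over total views across the whole group: busy articles weigh more."""
    total = sum(a.total_views for a in articles)
    if total == 0:
        return 0.0
    return 100 * sum(a.chatgpt_views for a in articles) / total
```

The two averages can diverge noticeably when one high-traffic article dominates a group, which matters when a four-article control group is compared with a nine-article test group.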

The Control Group

Our control group included four legacy articles that already received steady, reliable traffic. These articles weren’t chosen at random but were selected because GA4 provided enough historical data to make them a usable benchmark for comparison.

None of these articles were edited during the test, and they didn’t include any GPT_NOTE HTML embeds. They represented our standard publishing process: content built around traditional SEO tactics like intentional keyword placement, thorough alt text, and searcher-intent-matched language.

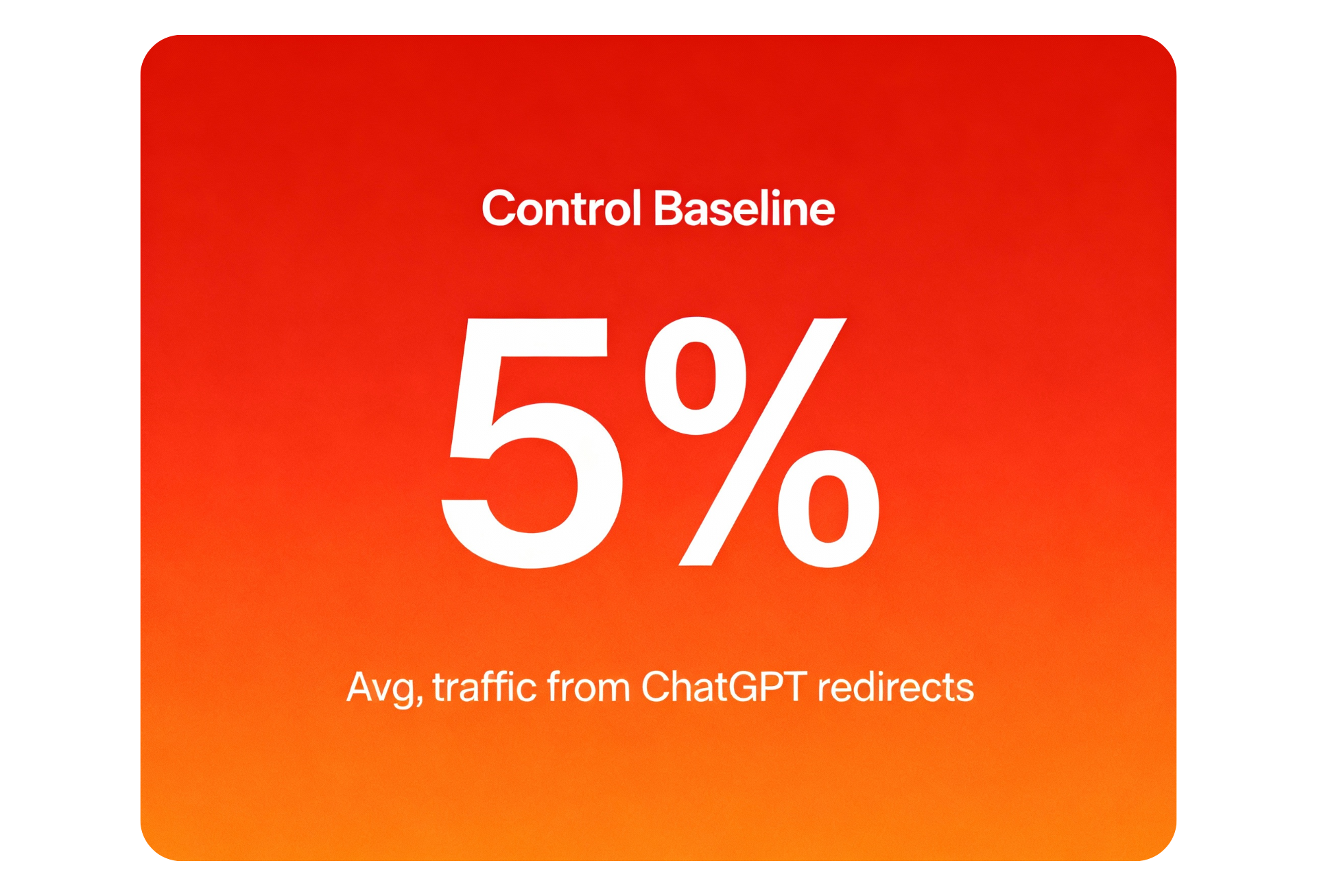

On average, about 5% of total traffic to these control articles came from ChatGPT redirects. That number served as the baseline percentage we used to measure changes in the test group.

The Test Group

The test group included nine articles published during the 45-day testing period, covering a range of topics, from short AI-trend tutorials and interviews with creators to detailed content guides.

After each new article was written, we prompted ChatGPT with the following request:

“Can you review this article and write 3 HTML comments (using the <!-- GPT_NOTE: ... --> format) that will improve its visibility and contextual relevance for ChatGPT? Each comment should help AI better understand the article’s purpose, audience, and key insights.”

The GPT_NOTE comments were then added through an HTML embed block within our publishing platform. This ensured the markup was included in the article’s HTML but remained invisible to readers on the page.
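A minimal Python sketch of the note format, assuming ChatGPT’s three notes arrive as plain strings: `format_gpt_notes` wraps each one in the `<!-- GPT_NOTE: ... -->` comment used in the prompt, and `extract_gpt_notes` pulls them back out of a page’s HTML, which is one way to confirm the embed block actually shipped. Both function names are hypothetical and not part of any publishing platform’s API.

```python
import re

# Matches the <!-- GPT_NOTE: ... --> format; DOTALL lets a single note span several lines.
GPT_NOTE_PATTERN = re.compile(r"<!--\s*GPT_NOTE:\s*(.*?)\s*-->", re.DOTALL)


def format_gpt_notes(notes: list[str]) -> str:
    """Wrap each note in its own HTML comment, one comment per line."""
    comments = []
    for note in notes:
        # Collapse runs of hyphens so a note can never contain "-->", which would end
        # the comment early and leak the rest of the note onto the visible page.
        safe = re.sub(r"-{2,}", "-", note).strip()
        comments.append(f"<!-- GPT_NOTE: {safe} -->")
    return "\n".join(comments)


def extract_gpt_notes(html: str) -> list[str]:
    """Return the text of every GPT_NOTE comment found in a page's HTML."""
    return GPT_NOTE_PATTERN.findall(html)
```

Because browsers don’t render comments, checking the page source (not the visible page) is where the notes should show up.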

Each set of notes was placed directly beneath the introduction and before the table of contents, with the goal of surfacing key context early in the article for any model scanning the page.
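The placement rule (after the introduction, before the table of contents) can be sketched as a simple string insertion. The `toc_marker` default is a hypothetical placeholder: the markup that actually opens a table of contents depends on the publishing platform, and in this experiment the notes went in through an embed block rather than by editing raw HTML.

```python
def insert_before_toc(article_html: str, notes_html: str,
                      toc_marker: str = '<nav class="toc"') -> str:
    """Place the GPT_NOTE block immediately before the table of contents.

    toc_marker is a hypothetical example; substitute whatever your platform emits.
    """
    index = article_html.find(toc_marker)
    if index == -1:
        # Fail loudly rather than silently dropping the notes somewhere unexpected.
        raise ValueError(f"table-of-contents marker {toc_marker!r} not found")
    return article_html[:index] + notes_html + "\n" + article_html[index:]
```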

The Results

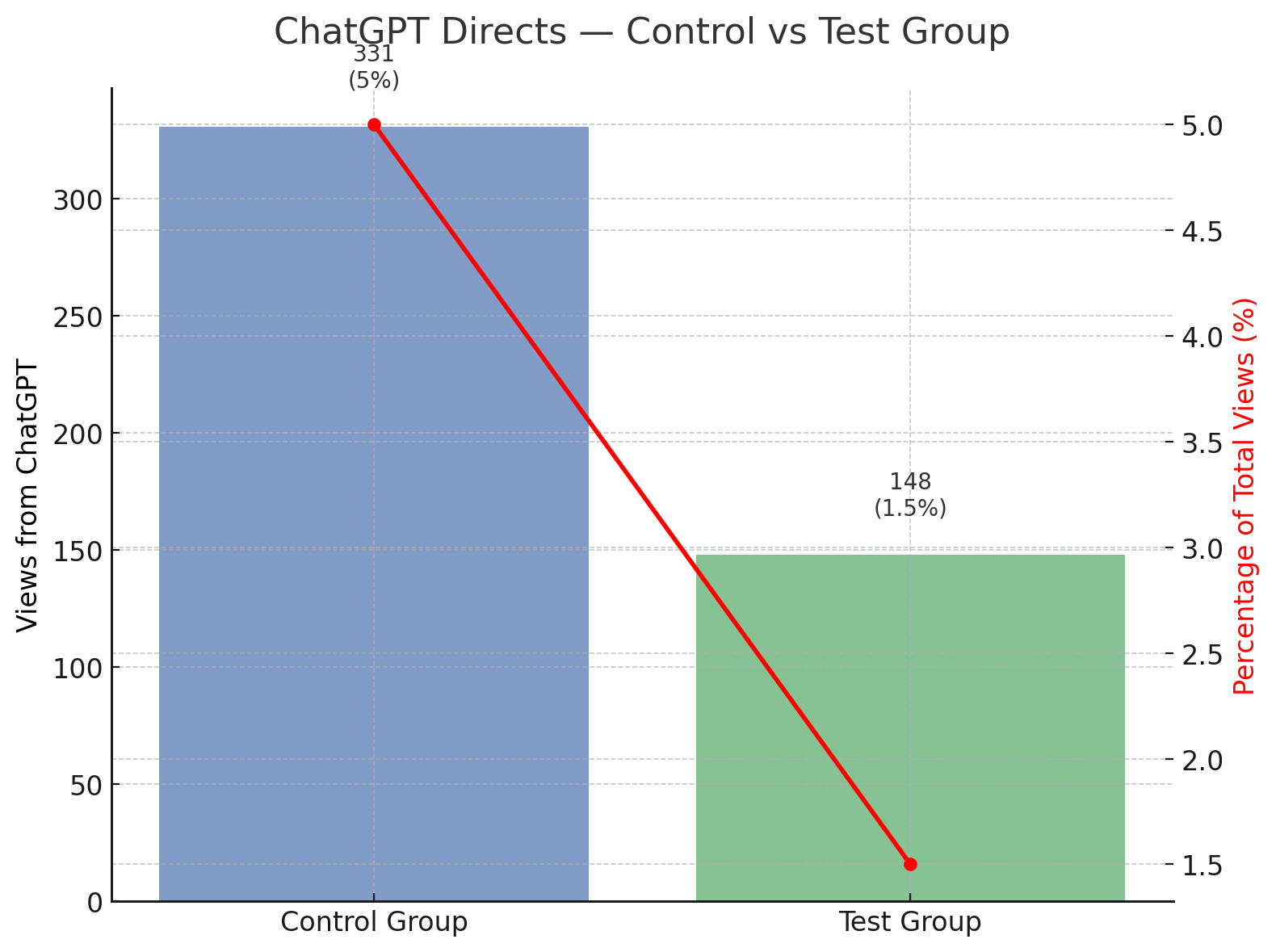

After 45 days of tracking, the results didn’t support our original hypothesis. Articles that included GPT_NOTE HTML blocks saw an average of 1.5% of total traffic coming from ChatGPT redirects, compared to 5% in the control group.
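To put the two figures side by side, the snippet below computes the gap between the reported 5% (control) and 1.5% (test) shares both in percentage points and as a relative change. The function name is illustrative.

```python
def compare_shares(control_pct: float, test_pct: float) -> tuple[float, float]:
    """Return (difference in percentage points, relative change in percent)."""
    point_diff = test_pct - control_pct
    relative_change = point_diff * 100 / control_pct
    return point_diff, relative_change


points, relative = compare_shares(control_pct=5.0, test_pct=1.5)
print(f"{points:+.1f} percentage points, {relative:+.0f}% relative")
# prints: -3.5 percentage points, -70% relative
```

So the test group’s ChatGPT share was 3.5 percentage points lower, or roughly 70% lower relative to the control baseline.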

In other words, the test group received proportionally fewer visits from ChatGPT than the unmodified articles. This specific implementation didn’t produce a measurable improvement within the testing window.

That said, the outcome doesn’t necessarily mean the approach has no merit. The results could have been shaped by several external factors, including the general unpredictability of search traffic. Future tests could isolate some of these variables to establish causation more reliably.
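One possible way to isolate the age, recency, and topic variables listed below (a suggested design, not what this experiment did) is to randomly split articles published in the same window into a GPT_NOTE group and a no-note group, so both groups share the same publication timing. The function name and the seed value are hypothetical.

```python
import random


def assign_groups(article_ids: list[str], seed: int = 0) -> dict[str, list[str]]:
    """Randomly split newly published articles into test (GPT_NOTE) and control (no note)."""
    rng = random.Random(seed)  # fixed seed so the split can be reproduced and audited later
    shuffled = article_ids[:]  # copy so the caller's list isn't reordered
    rng.shuffle(shuffled)
    half = len(shuffled) // 2  # with an odd count, control gets the extra article
    return {"test": sorted(shuffled[:half]), "control": sorted(shuffled[half:])}
```

Randomizing within one cohort of new posts means that, on average, neither group starts with an advantage from backlinks, rankings, or a trend-driven traffic spike.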

Here are some of the potential variables to consider before drawing any conclusions:

Statistician Notes

Below are the main factors that likely influenced the outcome of this test. While the data points are still useful, these variables make it difficult to isolate the exact impact of the GPT_NOTE embeds on ChatGPT-driven traffic.

- Topics of Articles: The articles written during the test period were still created with search volume in mind, so they didn’t mirror the subjects of the legacy control posts. Because the topics varied, each article likely attracted different levels of organic interest, which may have influenced the final results.

- Post Recency: Trend-based content tends to see an initial burst of engagement that declines steeply over time. Because the legacy articles had already stabilized in traffic before the test began, comparing them directly to newly published posts introduced unavoidable timing differences in engagement and visibility.

- Article Age: Older posts generally benefit from accumulated backlinks, page-level authority, and established rankings. Newer posts, by comparison, often start at a visibility disadvantage.

- Varying Sources: GA4’s referral data doesn’t explicitly identify every large language model or AI platform. Other AI tools may have interacted with the content differently, leading to untracked or misattributed visits.

- Measurement Lag: If ChatGPT’s indexing or web-scanning systems refresh less frequently than traditional search crawlers, it’s possible the embedded GPT_NOTEs weren’t processed during the 45-day test window, limiting any immediate impact.
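To make the “Varying Sources” issue concrete, here is a minimal sketch that maps a session source or referrer URL (as it might appear in a GA4 report) to an AI platform. The hostname list is an illustrative, non-exhaustive assumption; real source values should be checked in your own reports, since they change as platforms rebrand or add domains.

```python
from typing import Optional
from urllib.parse import urlparse

# Illustrative, non-exhaustive mapping of referrer hostnames to AI platforms (an assumption).
AI_REFERRERS = {
    "chatgpt.com": "ChatGPT",
    "chat.openai.com": "ChatGPT",
    "perplexity.ai": "Perplexity",
    "gemini.google.com": "Gemini",
    "copilot.microsoft.com": "Copilot",
    "claude.ai": "Claude",
}


def classify_source(source: str) -> Optional[str]:
    """Return the AI platform for a source like 'chatgpt.com' or a full referrer URL, else None."""
    host = urlparse(source).hostname if "://" in source else source
    host = (host or "").strip().lower()
    if host.startswith("www."):
        host = host[4:]
    for domain, platform in AI_REFERRERS.items():
        # Match the domain itself or any subdomain of it, but not look-alikes such as "notchatgpt.com".
        if host == domain or host.endswith("." + domain):
            return platform
    return None
```

Any AI-driven visit that returns `None` here still counts toward an article’s total views, which is how unlisted platforms can blur the measured ChatGPT share.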

See for Yourself

Below is a list of the articles included in both the control and test groups. They aren’t labeled, so feel free to check them out and try to guess which group each one belongs to.

- How to Make a ‘Roast Me’ ChatGPT Video

- How to Translate YouTube Videos (Subbing and Dubbing)

- ChatGPT Image Prompt Guide: 13 Tips from 9 Months of Tests

- How to Reverse Video Search

- What it Takes to Make Viral AI Video Content (We Asked Bigfoot)

- How to Use the Sora AI Video Generator

- How to Add Accurate Subtitles to Veo 3 Google Gemini Videos

- 80% of People Prefer Video Subtitles. Here's How they Affect Engagement.

- Using ChatGPT as a Thumbnail Maker (for YouTube and More)

- How to Make a Shooting Star Meme Video or GIF

- What Fonts Does TikTok Use and How Can You Get Them?

- How to Get Closed Captions That Meet European Accessibility Act (EAA) Requirements

- How to Make AI Bigfoot Videos (and Similar Styles)

Once you've taken a look, check your answers below.

Control vs Test Articles

Control Group

- How to Translate YouTube Videos (Subbing and Dubbing)

- 80% of People Prefer Video Subtitles. Here's How they Affect Engagement.

- How to Use the Sora AI Video Generator

- What Fonts Does TikTok Use and How Can You Get Them?

Test Group

- How to Get Closed Captions That Meet European Accessibility Act (EAA) Requirements

- How to Make a ‘Roast Me’ ChatGPT Video

- Using ChatGPT as a Thumbnail Maker (for YouTube and More)

- How to Add Accurate Subtitles to Veo 3 Google Gemini Videos

- How to Make a Shooting Star Meme Video or GIF

- ChatGPT Image Prompt Guide: 13 Tips from 9 Months of Tests

- What it Takes to Make Viral AI Video Content (We Asked Bigfoot)

- How to Make AI Bigfoot Videos (and Similar Styles)

- How to Reverse Video Search